Google's new 10-shade skin tone scale to boost inclusivity, cut AI bias

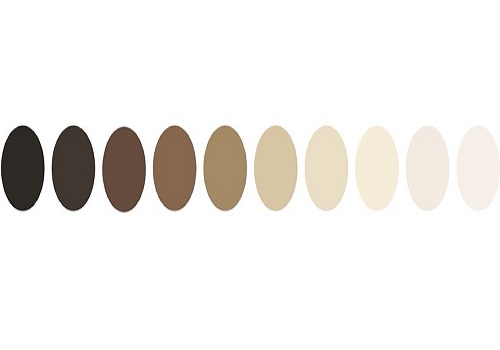

Tech giant Google has launched a novel 10-shade skin tone scale that will be incorporated into its products over the coming months to boost inclusivity and reduce Artificial Intelligence (AI) bias.

In a blogpost on Wednesday, Google said it designed the Monk Skin Tone (MST) scale in partnership with Harvard professor and sociologist Dr. Ellis Monk. The scale is meant to be easy to use in the development and evaluation of technology while representing a broader range of skin tones.

Skin tone plays a key role in how people experience and are treated in the world, and even factors into how they interact with technologies. Studies have shown that products built using AI and Machine Learning (ML) technologies can perpetuate unfair biases and not work well for people with darker skin tones.

"We're openly releasing the scale so anyone can use it for research and product development. Our goal is for the scale to support inclusive products and research across the industry - we see this as a chance to share, learn and evolve our work with the help of others," Tulsee Doshi, Head of Product for Responsible AI and Product Inclusion in Search, said in the blogpost.

"Updating our approach to skin tone can help us better understand representation in imagery, as well as evaluate whether a product or feature works well across a range of skin tones," Doshi said.

The scale could be especially important for computer vision, a type of AI that allows computers to see and understand images. When not built and tested intentionally to include a broad range of skin tones, computer vision systems have been found to perform less well for people with darker skin.

"The MST Scale will help us and the tech industry at large build more representative datasets so we can train and evaluate AI models for fairness, resulting in features and products that work better for everyone - of all skin tones," Doshi said.

Every day, millions of people search the web expecting to find images that reflect their specific needs. For example, when people search for makeup-related queries in Google Images, they can see an option to further refine results by skin tone.

"In our research, we found that a lot of the time people feel they're lumped into racial categories, but there's all this heterogeneity with ethnic and racial categories," Dr. Monk said.

"And many methods of categorisation, including past skin tone scales, don't pay attention to this diversity. That's where a lack of representation can happen. We need to fine-tune the way we measure things, so people feel represented," he added.
